ResNets

Neural ODEs build on ResNets

As plain networks were made deeper, their performance decreased instead of improving, and it seemed that deep learning had reached its limit. ResNets solved this problem, making it possible to train networks hundreds of layers deep with state-of-the-art performance.

A ResNet also adds each block’s input directly to its output. This shortcut connection improves the model because, at worst, the residual block can learn to do nothing and simply pass its input through unchanged.

Formally, a ResNet can be described by the following equation:

$$

h_{t+1} = h_{t}+f(h_{t},\theta_{t})

$$

$h_t$ - the value of the hidden layer;

$t$ - tells us which layer we are looking at;

$\theta_t$ - the parameters of layer $t$.

In words, the next hidden layer is the sum of its input and a learned function of that input, as we have seen.
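A minimal sketch of this update, assuming a scalar hidden state and a hypothetical residual function $f(h, \theta) = \theta \tanh(h)$ (a real ResNet block uses convolutions, normalization and a nonlinearity). It also illustrates the point about shortcut connections: a block whose parameter is zero passes its input through unchanged.

```python
import math

def f(h, theta):
    # Hypothetical residual function standing in for a real ResNet block.
    return theta * math.tanh(h)

def resnet_forward(h0, thetas):
    """Apply h_{t+1} = h_t + f(h_t, theta_t) once per layer; return every hidden state."""
    hs = [h0]
    for theta in thetas:
        hs.append(hs[-1] + f(hs[-1], theta))
    return hs

states = resnet_forward(1.0, [0.5, -0.2, 0.0])
print(states)
# The last block has theta = 0, so it contributes nothing and acts as the identity.
print(states[-1] == states[-2])
```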

An introduction to ResNets can be found in the References.

Euler’s Method

How Neural ODEs work

The equation above looks like something from calculus. In case you don’t remember it from class, Euler’s method is the simplest way to approximate the solution of a differential equation with a given initial value:

$$

\text{Initial value problem: } y'(t) = f(t, y(t)), \quad y(t_0) = y_0

$$

$$

\text{Euler's method: } y_{n+1} = y_n + h\, f(t_n, y_n)

$$

Repeating this update with step size $h$, where $t_n = t_0 + nh$, gives a numerical approximation of $y(t)$ at each $t_n$.
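Euler’s method fits in a few lines of Python. A minimal sketch; the function name `euler` and the test equation $y' = y$, $y(0) = 1$ (exact solution $e^t$) are illustrative choices, not from the original:

```python
import math

def euler(f, t0, y0, h, n_steps):
    """Approximate y'(t) = f(t, y), y(t0) = y0 with n_steps Euler steps of size h."""
    t, y = t0, y0
    ys = [y0]
    for _ in range(n_steps):
        y = y + h * f(t, y)   # y_{n+1} = y_n + h f(t_n, y_n)
        t = t + h             # t_{n+1} = t_n + h
        ys.append(y)
    return ys

# y' = y, y(0) = 1: the approximation of y(1) should be close to e.
approx = euler(lambda t, y: y, 0.0, 1.0, 0.001, 1000)[-1]
print(approx, math.e)
```

Shrinking $h$ (and taking more steps) reduces the error, at the price of more function evaluations.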

Euler’s method and the ResNet equation have the same form; the only difference is the step size $h$ that multiplies the function. With $h = 1$ they are identical, so we can view a ResNet as an Euler discretization of an underlying differential equation.
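A small check of this correspondence, under illustrative assumptions: a scalar state, hypothetical per-layer parameters `THETAS`, and the layer index used as the time variable. Running the ResNet update and Euler’s method with step size $1$ on the same function gives exactly the same result; a smaller step gives a finer discretization of the same dynamics.

```python
import math

THETAS = [0.3, -0.1, 0.8]  # hypothetical per-layer parameters

def f(t, h):
    # Residual function whose parameters depend on the layer index t ("time").
    return THETAS[int(t)] * math.tanh(h)

def resnet(h0):
    h = h0
    for t in range(len(THETAS)):
        h = h + f(t, h)                 # h_{t+1} = h_t + f(h_t, theta_t)
    return h

def euler(h0, step):
    h, t = h0, 0.0
    for _ in range(round(len(THETAS) / step)):
        h = h + step * f(t, h)          # y_{n+1} = y_n + h f(t_n, y_n)
        t = t + step
    return h

print(resnet(2.0) == euler(2.0, step=1.0))  # a ResNet is Euler's method with h = 1
print(euler(2.0, step=0.25))                # finer steps over the same "depth"
```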

Instead of going from a differential equation to Euler’s method, we can reverse-engineer the problem. Starting from the ResNet and letting the step size shrink toward zero, the resulting differential equation is

$$

\text{Neural ODE: } \frac{dh(t)}{dt} = f(h(t), t, \theta)

$$

which describes the dynamics of our model.

The Basics

how a Neural ODE works

A Neural ODE combines two concepts, deep learning and differential equations. Here we use the simplest solver, Euler’s method, to make predictions.
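A minimal sketch of a Neural ODE forward pass, assuming a scalar state and a hypothetical dynamics function $f(h, t, \theta) = \theta h$, chosen so that the exact answer $h(t) = h_0 e^{\theta t}$ is known. Unlike a ResNet, one set of parameters $\theta$ is shared across all of time, and the number of Euler steps is a solver setting rather than a fixed number of layers.

```python
import math

def dynamics(h, t, theta):
    # Hypothetical f(h(t), t, theta); a real model would use a neural network here.
    return theta * h

def odeint_euler(f, h0, t0, t1, theta, n_steps):
    """Integrate dh/dt = f(h, t, theta) from t0 to t1 with fixed-step Euler."""
    dt = (t1 - t0) / n_steps
    h, t = h0, t0
    for _ in range(n_steps):
        h = h + dt * f(h, t, theta)
        t = t + dt
    return h

theta, h0 = -1.5, 2.0
exact = h0 * math.exp(theta * 1.0)
for n in (10, 100, 1000):
    pred = odeint_euler(dynamics, h0, 0.0, 1.0, theta, n)
    print(n, pred, abs(pred - exact))  # the error shrinks as the step count grows
```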

Q:

How do we train it?

A:

Adjoint method

This involves using another numerical solver that runs backwards through time (backpropagation) to compute the gradients, which are then used to update the model’s parameters.

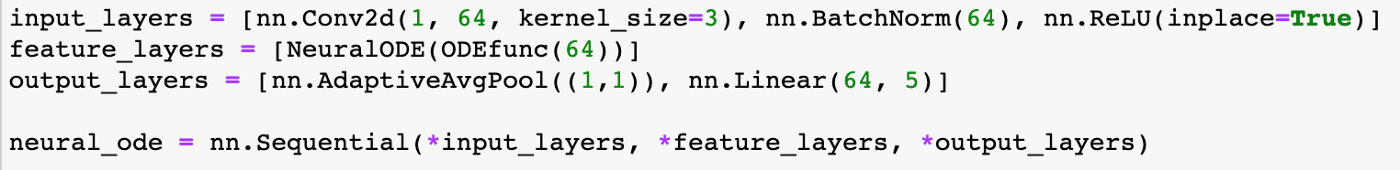

- Defining the architecture:

- Defining a Neural ODE:

- Putting it all together:

Adjoint Method

Part 3: how a Neural ODE backpropagates with the adjoint method.
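A minimal sketch of the adjoint method, assuming the scalar dynamics $f(h, t, \theta) = \theta h$ and the loss $L = h(T)$, chosen so the exact gradient $\partial L / \partial \theta = h_0 T e^{\theta T}$ can be checked. The adjoint $a(t) = \partial L / \partial h(t)$ obeys $\frac{da}{dt} = -a \frac{\partial f}{\partial h}$, and $\frac{\partial L}{\partial \theta} = \int_0^T a(t) \frac{\partial f}{\partial \theta}\, dt$. The backward pass integrates $h$, $a$ and this integral together from $T$ back to $0$, so the forward trajectory is never stored; this is where the constant memory cost comes from. Fixed-step Euler is an assumption for illustration; practical implementations typically use adaptive solvers.

```python
import math

def forward(h0, theta, T, n_steps):
    """Euler solve of dh/dt = theta * h; only the final state is kept."""
    dt = T / n_steps
    h = h0
    for _ in range(n_steps):
        h = h + dt * theta * h
    return h

def adjoint_grad(hT, theta, T, n_steps):
    """dL/dtheta for L = h(T), integrating h, a and the gradient from T back to 0."""
    dt = T / n_steps
    h = hT      # state, reconstructed backwards in time
    a = 1.0     # adjoint at T: dL/dh(T) = 1
    g = 0.0     # running integral of a(t) * df/dtheta
    for _ in range(n_steps):
        df_dh, df_dtheta = theta, h                    # partials of f = theta * h
        g = g + dt * a * df_dtheta                     # accumulate dL/dtheta
        h, a = h - dt * theta * h, a + dt * a * df_dh  # one Euler step backwards
    return g

h0, theta, T, n = 1.0, 0.5, 1.0, 10_000
hT = forward(h0, theta, T, n)
grad = adjoint_grad(hT, theta, T, n)
exact = h0 * T * math.exp(theta * T)
print(grad, exact)  # the adjoint gradient matches the analytic one closely
```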

Model Comparison

We start with a simple machine learning model to showcase the strengths and weaknesses of each approach.

- Training time: the ResNet model takes less time per epoch, while the Neural ODE model takes more.

- Memory: the ResNet model uses more memory, while the Neural ODE model uses $O(1)$ memory with respect to depth.

Overall, one of the main benefits of Neural ODEs is constant memory usage while training the model. However, this comes at the cost of longer training time.

VAE (Variational Autoencoders)

Premise:

Generative model: able to generate samples like those in the training data.

A VAE is a directed generative model with observed and latent variables, which gives us a latent space to sample from.

Motivated by the application of interpolating between sentences, I will use a VAE to connect the RNN and the ODE.

When the VAE is used as an inference model, the input $x$ is passed to the encoder network, producing an approximate posterior $q(z|x)$ over the latent variables.
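A minimal sketch of how a latent sample is drawn from the approximate posterior, assuming (as is standard for VAEs) that $q(z|x)$ is a diagonal Gaussian whose mean and log-variance are produced by the encoder. The encoder outputs below are made-up numbers. The reparameterization $z = \mu + \sigma \epsilon$ keeps sampling differentiable with respect to $\mu$ and $\sigma$, and the KL term measures how far $q(z|x)$ is from the prior $\mathcal{N}(0, I)$.

```python
import math
import random

def sample_posterior(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    """KL(q(z|x) || N(0, I)) for a diagonal Gaussian q."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))

rng = random.Random(0)
mu, logvar = [0.5, -1.0], [-2.0, 0.0]   # hypothetical encoder outputs for one input x
z = sample_posterior(mu, logvar, rng)
print(z, kl_to_standard_normal(mu, logvar))
```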

Sentence prediction by conventional autoencoder

Sentences produced by greedily decoding from points between two sentence encodings with a conventional autoencoder. The intermediate sentences are not plausible English.

VAE Language Model

Words are represented using a learned dictionary of word embeddings.

VAE sentence interpolation

- Paths between random points in VAE space

- Intermediate sentences are grammatical

- Topic and syntactic structure are consistent
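The paths above can be sketched as linear interpolation between two latent codes, assuming each point on the path is then decoded (for example greedily) into a sentence. The latent vectors here are made-up, and the decoder is left out because it depends on the trained model.

```python
def interpolate(z_a, z_b, n_points):
    """Evenly spaced points on the straight line from z_a to z_b, inclusive (n_points >= 2)."""
    path = []
    for i in range(n_points):
        alpha = i / (n_points - 1)
        path.append([(1 - alpha) * a + alpha * b for a, b in zip(z_a, z_b)])
    return path

z_start, z_end = [0.0, 1.0, -2.0], [1.0, -1.0, 2.0]   # hypothetical latent codes
path = interpolate(z_start, z_end, 5)
for z in path:
    print(z)  # each z would be passed to the decoder to produce an intermediate sentence
```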

Breakdown of another deep learning breakthrough

- First, encode the input sequence with a “standard” time-series model, say an RNN, to obtain a primary embedding of the process

- Next, run the embedding through the Neural ODE to get a “continuous” embedding

- Finally, recover the initial sequence from the “continuous” embedding in VAE fashion
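The three steps can be sketched end to end with toy scalar components. Everything here is a hypothetical stand-in: a one-unit RNN with made-up weights (run over the sequence in reverse so that its final hidden state describes the initial latent state $z_0$), linear dynamics for the Neural ODE, and a linear decoder. It only shows how data flows through encoder, ODE solver and decoder, not a trained model.

```python
import math
import random

W_IN, W_REC = 0.8, 0.5          # hypothetical RNN weights
W_MU, W_LOGVAR = 1.2, -0.5      # hypothetical heads producing q(z0 | x)
THETA = -0.3                    # hypothetical ODE parameter: dz/dt = THETA * z
W_OUT = 2.0                     # hypothetical linear decoder

def encode(xs):
    """Step 1: run a one-unit RNN over the sequence (in reverse); return mu, logvar."""
    h = 0.0
    for x in reversed(xs):
        h = math.tanh(W_IN * x + W_REC * h)
    return W_MU * h, W_LOGVAR * h

def solve_ode(z0, ts, n_sub=20):
    """Step 2: Euler-integrate dz/dt = THETA * z, returning z at every time in ts."""
    zs, z, t = [], z0, ts[0]
    for t_next in ts:
        dt = (t_next - t) / n_sub
        for _ in range(n_sub):
            z = z + dt * THETA * z
        t = t_next
        zs.append(z)
    return zs

def decode(zs):
    """Step 3: map each latent state back to observation space."""
    return [W_OUT * z for z in zs]

ts = [0.0, 0.5, 1.0, 1.5]
xs = [1.0, 0.8, 0.7, 0.5]       # made-up observed sequence at times ts
mu, logvar = encode(xs)
z0 = mu + math.exp(0.5 * logvar) * random.Random(0).gauss(0.0, 1.0)  # z0 ~ q(z0 | x)
recon = decode(solve_ode(z0, ts))
print(recon)  # training would minimize reconstruction error plus the KL term
```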

VAE as a generative model

variational autoencoder approach

A generative model defined through a sampling procedure:
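Once the model is trained, generating new data needs no encoder: sample an initial latent state from the prior, solve the ODE forward to whatever times are wanted (including times beyond the observed window), and decode. A minimal sketch, with hypothetical linear dynamics and a linear decoder standing in for trained networks:

```python
import random

THETA, W_OUT = -0.3, 2.0   # hypothetical trained ODE parameter and decoder weight

def generate(ts, rng, n_sub=20):
    """Sample z0 ~ N(0, 1), Euler-integrate dz/dt = THETA * z through ts, and decode."""
    z = rng.gauss(0.0, 1.0)      # prior p(z0) = N(0, 1)
    t, xs = ts[0], []
    for t_next in ts:
        dt = (t_next - t) / n_sub
        for _ in range(n_sub):
            z = z + dt * THETA * z
        t = t_next
        xs.append(W_OUT * z)     # decoder maps the latent state to an observation
    return xs

rng = random.Random(42)
for _ in range(3):
    print(generate([0.0, 1.0, 2.0, 5.0], rng))  # each call yields a new trajectory
```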

Training:

Define Model

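```python
import torch.nn as nn

class RNNEncoder(nn.Module): ...        # encodes the observed sequence into q(z0 | x)
class NeuralODEDecoder(nn.Module): ...  # solves the latent ODE and decodes each state
class ODEVAE(nn.Module): ...            # combines encoder and decoder into one VAE
```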